The volume of information online is growing relentlessly. Just imagine: every day, users around the world create 500 million tweets, 294 billion emails, 4 million gigabytes of data on Facebook, and 65 million notifications on WhatsApp. At the same time, there is a need for secure storage, fast sharing, and quality analysis of information. Data as a Service, a data management model, is helping to meet this need.

This approach entrusts the collection, storage, integration, processing, and analysis of data to dedicated teams. This article describes the main advantages and capabilities of the DaaS model.

Data as a Service’s Prerequisites

In the early 2000s, many IT companies faced a rapid increase in data volumes, among them well-known corporations such as Google, IBM, Oracle, and Microsoft. Professionals were trying to figure out how to get the most business value out of this data flow. A solution soon emerged: sell the results of analytics on a subscription basis.

It is worth noting that the notion of Software as a Service (SaaS) already existed in those days, and a family of “as a Service” concepts had formed around it. It included IaaS (Infrastructure as a Service), PaaS (Platform as a Service), and the not yet fully formed concept of DaaS as a business model. On the technical side, DaaS is a way to collect, store, process, and analyze data, handled by a separate team of Data Engineers and Data Analysts within a company.

At the same time, corporations had difficulty processing large volumes of data for their own internal use. Everyone understood the value of information and tried to introduce Data as a Service throughout the organization, but small and medium-sized businesses could not afford it. This changed with the emergence of the cloud provider market, which offered a variety of tools and techniques for data processing. Thanks to the development of this market, DaaS as a management concept grew in popularity.

What Problems Does Data as a Service Solve?

Today, DaaS systems demonstrate powerful capabilities when working with data that falls within well-defined boundaries: a company’s internal data, data about the users of a particular platform, or data from a particular business area.

Among the main tasks that DaaS helps to solve are the following:

- Collecting information from external sources (media, social networks, mobile devices) and internal ones (databases or services).

- Transporting data, for example over the HTTP network protocol.

- Storing data in the file repositories of cloud providers.

- Analyzing data. This is the most complex stage, in which the company’s raw data is transformed into useful, valuable information that can be monetized and, most importantly, that customers can understand.

- Delivering data to the customer in a way that is convenient for them. Usually, this is done using a RESTful web API or BI tools.
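The five tasks above can be sketched as a chain of plain functions. This is only a minimal illustration in Python, not how any particular DaaS product works: the function names, the sample records, and the in-memory `STORE` dictionary are all hypothetical stand-ins for real sources, network transport, and cloud storage.

```python
import json
from statistics import mean

# Hypothetical in-memory stand-in for a cloud file repository.
STORE = {}


def collect():
    """Gather records from (pretend) internal and external sources."""
    internal = [{"source": "internal", "user": "u1", "score": 80}]
    external = [{"source": "external", "user": "u1", "score": 90}]
    return internal + external


def transport(records):
    """Serialize records the way they might be sent over HTTP."""
    return json.dumps(records)


def store(key, payload):
    """Keep the raw payload under a key, as a file repository would."""
    STORE[key] = payload


def analyze(key):
    """Turn raw records into a small summary a customer can read."""
    records = json.loads(STORE[key])
    scores = [r["score"] for r in records]
    return {"records": len(records), "average_score": mean(scores)}


def deliver(summary):
    """Wrap the result in the shape a REST endpoint might respond with."""
    return json.dumps({"status": "ok", "data": summary})


def run_pipeline():
    """Run all five stages in order and return the delivered response."""
    store("raw/batch-1.json", transport(collect()))
    return deliver(analyze("raw/batch-1.json"))


if __name__ == "__main__":
    print(run_pipeline())
```

The point of the sketch is the separation of stages: the consumer only ever calls the delivery step and never needs to know how the earlier ones are implemented.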

NIX developers have their own examples of DaaS implementations. Let’s take a look at one of them. Here, the business is an LMS (Learning Management System). The project provides educational services and consists of 43 services. Each of them generates a significant amount of information that needs to be handled by separate teams of Data Engineers and Data Analysts, and it is these teams that develop the DaaS system.

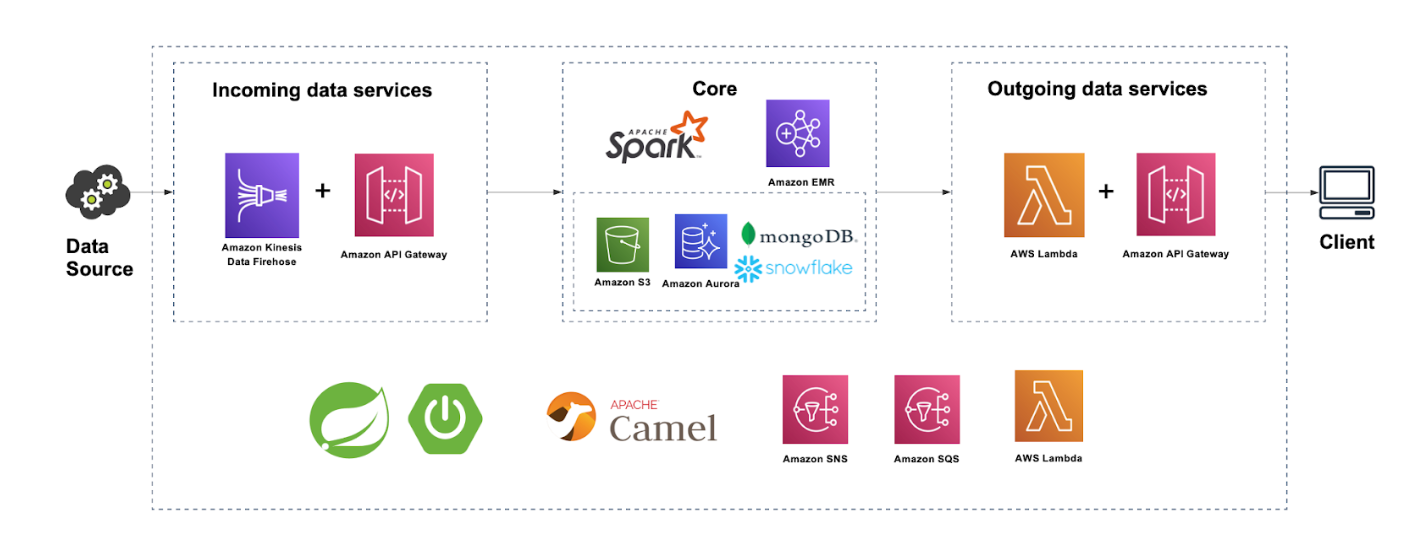

The general DaaS model is as follows. There is a Data Source from which data goes to incoming data services. These can be Spring services or Amazon API Gateway + Amazon Kinesis Data Firehose. Then the data is transmitted to the main logical block of the system (Core). There, it undergoes a primary transformation in Spring modules, if required by the business logic, and is stored in one of the following repositories:

- Amazon S3: all data in raw form and finished reports

- Amazon Aurora: temporary storage for some individual logical blocks

- MongoDB: main database

- Snowflake: Data Lake

The Core also transforms and analyzes the data using Apache Spark, which runs on Amazon EMR.

The next block contains the services that deliver the analysis results to customers, which in this case are the internal services. This part is implemented with Spring servers or pairs of AWS Lambda + Amazon API Gateway. Some technologies are also used throughout the system, for example:

- Apache Camel: for connecting Spring modules with each other

- Amazon SQS + Amazon SNS: for system-wide messaging

- AWS Lambda: for running individual logic functions

This was the overall scheme of the system. Next, let’s take a closer look at one of the dozens of pipelines.

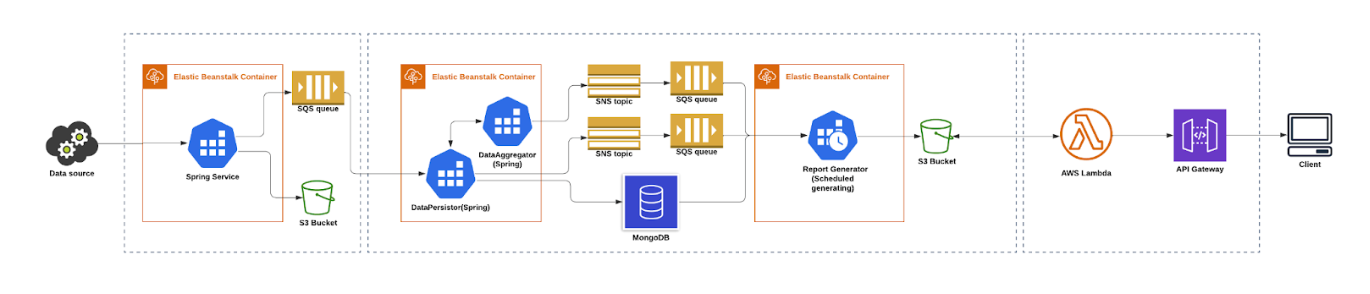

Like every LMS, this one has students, teachers, and a grading process. Let’s find out how the results of a student’s assignment get into the system and how the client can benefit from them.

The data comes from the internal LMS service to the Spring data collection service under a pre-agreed contract. The raw data is stored in an S3 bucket for archiving. A message containing the data received from the source is then sent to an SQS queue. The Core reads the messages from the SQS queue and transforms the data in order to store it in the MongoDB database.
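The ingestion path in this paragraph can be sketched with in-memory stand-ins. This is an illustrative Python sketch, not the project's Spring code: `raw_bucket`, `queue`, and `mongo_collection` are hypothetical substitutes for the S3 bucket, the SQS queue, and the MongoDB collection, and the field names in the sample event are assumptions rather than the real contract.

```python
import json
from collections import deque

# Hypothetical in-memory stand-ins for the S3 bucket, the SQS queue,
# and the MongoDB collection.
raw_bucket = {}
queue = deque()
mongo_collection = []


def ingest(event_id, raw_body):
    """Collection service: archive the raw payload, then enqueue it."""
    raw_bucket[f"raw/{event_id}.json"] = raw_body
    queue.append(raw_body)


def transform(raw_body):
    """Core: reshape a raw event into the document that gets stored."""
    event = json.loads(raw_body)
    return {
        "student_id": event["studentId"],
        "assignment_id": event["assignmentId"],
        "grade_percent": round(100 * event["points"] / event["maxPoints"], 1),
    }


def process_queue():
    """Core: drain the queue, transforming and saving each message."""
    saved = 0
    while queue:
        mongo_collection.append(transform(queue.popleft()))
        saved += 1
    return saved


if __name__ == "__main__":
    body = json.dumps(
        {"studentId": "s-1", "assignmentId": "a-7", "points": 42, "maxPoints": 50}
    )
    ingest("evt-1", body)
    process_queue()
    print(mongo_collection)
```

Archiving the untouched payload before any transformation means the raw data can be reprocessed later if the transformation logic changes.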

Notifications that the data has been received and successfully saved in MongoDB are published to SNS topics, from which SQS queues pick them up. They accumulate in the Report Generator, which selects the necessary data from MongoDB based on the received messages and generates a report on the student’s progress, along with a plan for improving it. The report goes to an S3 bucket, from where it is transmitted using AWS Lambda + API Gateway to the client (an internal service). The latter shows it to the user (a student or teacher) in a user-friendly format.
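The topic-to-queue fan-out and the report step can be sketched in the same spirit. The `Topic` class below is a hypothetical in-memory stand-in for an SNS topic with subscribed queues, `grades` stands in for MongoDB, and the second subscriber (`audit_queue`) is invented purely to show the fan-out. The sketch computes only a simple average and leaves out the improvement plan.

```python
from collections import deque


class Topic:
    """Hypothetical stand-in for an SNS topic.

    Each published message is copied to every subscribed queue.
    """

    def __init__(self):
        self.queues = []

    def subscribe(self, queue):
        self.queues.append(queue)

    def publish(self, message):
        for queue in self.queues:
            queue.append(message)


def generate_report(notifications, grades):
    """Build a per-student progress report from 'saved' notifications.

    `grades` maps record ids to stored documents (a MongoDB stand-in).
    The notifications queue is drained as it is processed.
    """
    by_student = {}
    while notifications:
        record_id = notifications.popleft()["record_id"]
        doc = grades[record_id]
        by_student.setdefault(doc["student_id"], []).append(doc["grade_percent"])
    return {
        student: {"assignments": len(values), "average": sum(values) / len(values)}
        for student, values in by_student.items()
    }


if __name__ == "__main__":
    grades = {
        "r1": {"student_id": "s-1", "grade_percent": 80.0},
        "r2": {"student_id": "s-1", "grade_percent": 90.0},
    }
    saved_topic = Topic()
    report_queue, audit_queue = deque(), deque()
    saved_topic.subscribe(report_queue)
    saved_topic.subscribe(audit_queue)
    for record_id in grades:
        saved_topic.publish({"record_id": record_id})
    print(generate_report(report_queue, grades))
```

Because every subscriber gets its own copy of each notification, new consumers can be added later without touching the service that publishes them.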

Such a report is in demand among teachers and students. Consequently, internal services benefit greatly by consuming it as a service without having to think about its implementation.

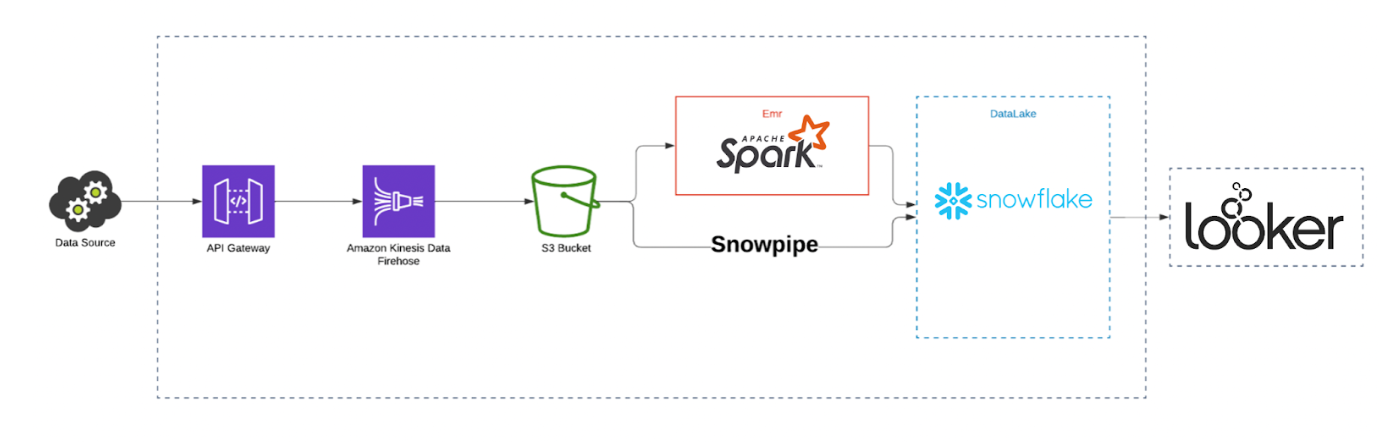

Here is another example: collecting information about user activity from all services. That amounts to 28 GB of data in messages every day, or approximately 40,000 messages every hour. The approach here is slightly different. Data from the services goes to the DaaS system via API Gateway + Amazon Kinesis Data Firehose and is stored in Amazon S3. This combination makes it possible to process such a large amount of data efficiently.
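To get a feel for these figures, here is a rough back-of-the-envelope calculation in Python. It assumes decimal gigabytes (10^9 bytes) and traffic spread evenly over the day, neither of which the figures above specify, so the results are only order-of-magnitude estimates.

```python
GB = 10**9  # assumption: decimal gigabytes

bytes_per_day = 28 * GB
messages_per_hour = 40_000

# Derived quantities, assuming traffic is spread evenly over 24 hours.
messages_per_day = messages_per_hour * 24
avg_message_bytes = bytes_per_day / messages_per_day
messages_per_second = messages_per_hour / 3600
bytes_per_second = bytes_per_day / (24 * 3600)

print(f"messages per day:    {messages_per_day:,}")
print(f"average message:     {avg_message_bytes / 1000:.1f} kB")
print(f"messages per second: {messages_per_second:.1f}")
print(f"sustained ingest:    {bytes_per_second / 1000:.1f} kB/s")
```

Under these assumptions, the load works out to roughly 960,000 messages a day at about 29 kB each, or around 11 messages per second on average. Real traffic is likely to be burstier than this average suggests.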

The information then goes into Snowflake. Apache Spark is used when a preliminary transformation is required, and Snowpipe when it is not. In Snowflake, Data Analysts build a Data Lake from the data. The results are delivered to customers (the company’s business analysts) through the BI tool Looker. This is how the DaaS system is implemented in the LMS project.

Now let’s move on to the main advantages of Data as a Service, which have made this model popular.

Benefits of Data as a Service

- It’s financially beneficial

Let’s say there is a data provider that offers analytics on public data. It would be much easier and cheaper to buy their results than to build your own system to do the same work.

Alternatively, consider a company with many different services generating content. One service needs analytics from five servers, another from six, and they are all interconnected. Instead of each team collecting its own analytics from all the services and duplicating data, it is better to create a dedicated team and delegate that responsibility to it. That way, each individual service can focus on its own business task.

- Ease of use for the client

You don’t have to think about the tasks the team performs while working on the project. You don’t have to worry about how the data is collected, stored, processed, and analyzed; you just use the end result.

- High-quality data

Because the data analysis is performed by a team of professionals with specialized knowledge and information processing technology, the quality of the results is significantly higher.

Final Thoughts on Data as a Service

The data analytics industry will continue to evolve. The key players in the market—AWS, Oracle, Google, Meta, Apple, and Microsoft—will grow relentlessly. Other companies that use internal data analytics will more often implement DaaS. Even small teams will generate more and more information in the course of their activities, which will also need to be processed, so the demand for Data Engineers is likely to grow. Therefore, start mastering the DaaS model now.

If you’re in business, it’s time to lay down the architecture of a DaaS system in your company. Figure out what data your application or website will handle and what functions the product will perform. For an existing service, analyze how much data the application already handles and whether a separate team is needed to handle it. These specialists will be in charge of creating DaaS for your project.