4 October

How to Integrate WebSockets and Not Get Burned?

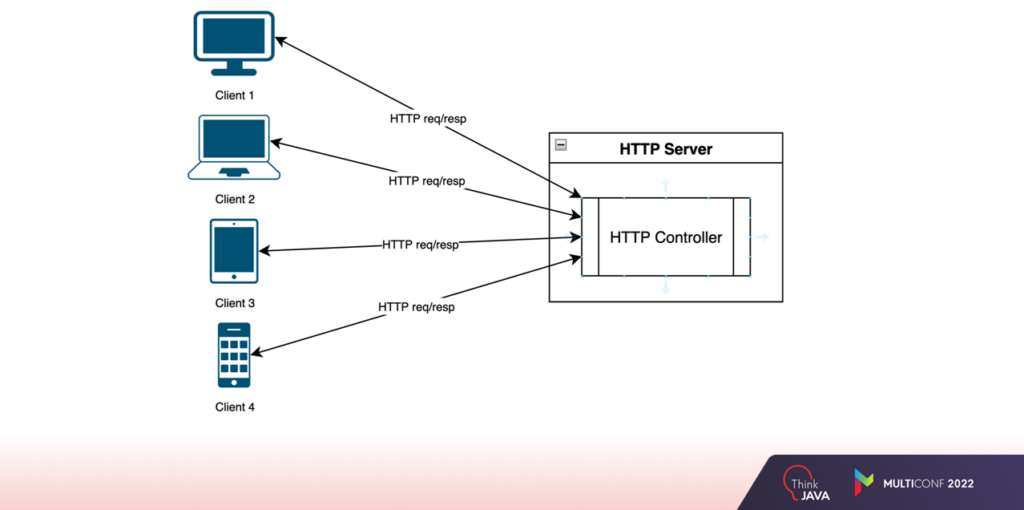

Let’s introduce a simple to-do manager. Its client (i.e. the browser window) sends a request to the server and receives a response. For another client to see any updates, it must make its own request and receive this data. But what do you do when users need to see all updates on the fly? Unfortunately, classic HTTP does not solve this problem. Most likely, when you start to google alternatives, you will come across a lot of information about WebSockets.

So what are sockets, why use them, and how do web applications work in general? This article will explain all this further on with a simple example.

WebSocket is a protocol that, unlike HTTP, allows for constant two-way communication between client and server. As you can see in the illustration, in this case, the life cycle of a connection consists of three stages:

- Connection initialization: a handshake request is made over HTTP, after which the connection is upgraded to WebSocket.

- Data transfer: messages can be sent from the client to the server and vice versa.

- Closing the connection: both the client (for example, by closing a tab) and the server (programmatically) can initiate the close.
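To make the first stage a little more concrete, here is a minimal sketch of the one calculation the server performs during the handshake. As defined in RFC 6455, the server takes the client's `Sec-WebSocket-Key` header, appends a fixed GUID, hashes the result with SHA-1 and returns it Base64-encoded in `Sec-WebSocket-Accept`. The class and method names are made up for illustration; in a Spring application the framework does this for you.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Base64;

// Illustrative helper (hypothetical name), not part of any framework.
class HandshakeSketch {

    // Fixed GUID defined by RFC 6455 for the opening handshake.
    static final String WS_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11";

    // Computes the Sec-WebSocket-Accept value for a given Sec-WebSocket-Key.
    static String acceptKey(String secWebSocketKey) {
        try {
            MessageDigest sha1 = MessageDigest.getInstance("SHA-1");
            byte[] digest = sha1.digest(
                    (secWebSocketKey + WS_GUID).getBytes(StandardCharsets.US_ASCII));
            return Base64.getEncoder().encodeToString(digest);
        } catch (NoSuchAlgorithmException e) {
            // SHA-1 is one of the algorithms every Java platform must provide.
            throw new IllegalStateException(e);
        }
    }
}
```

With the sample key from the RFC, `HandshakeSketch.acceptKey("dGhlIHNhbXBsZSBub25jZQ==")` returns `s3pPLMBiTxaQ9kYGzzhZRbK+xOo=`.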

Let’s look at how to connect it all to a classic Spring Boot application.

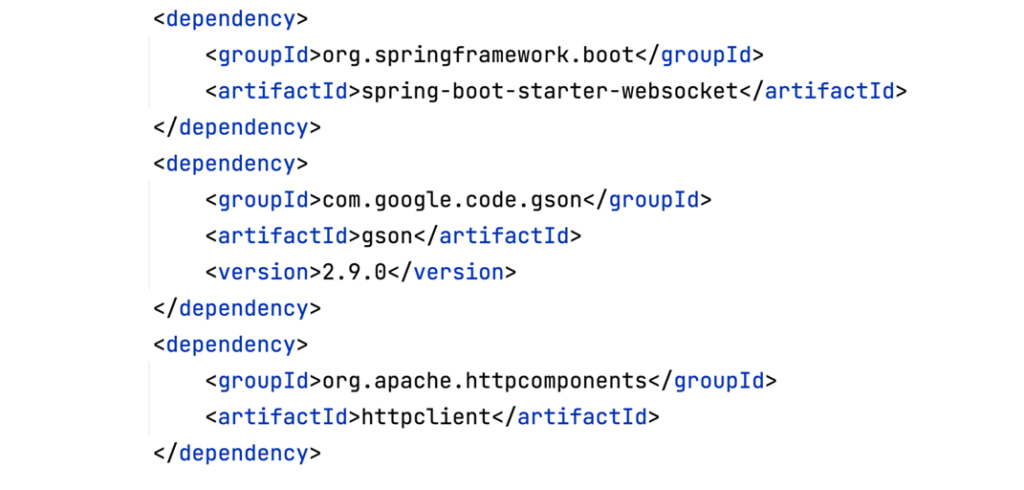

What do updates start with? Correct: adding the necessary dependencies.

- For ease of use, Spring has many starters in a variety of colors and sizes. Since the interest here is in sockets, let’s add the corresponding WebSocket starter.

- Since the data will be in JSON format, let’s add Gson, the JSON library from Google.

- In a few places, utilities that make URL parsing easier will be used, so let’s add a third dependency, this time from Apache.

The preliminary setup is complete. Let’s move on to the Spring configuration.

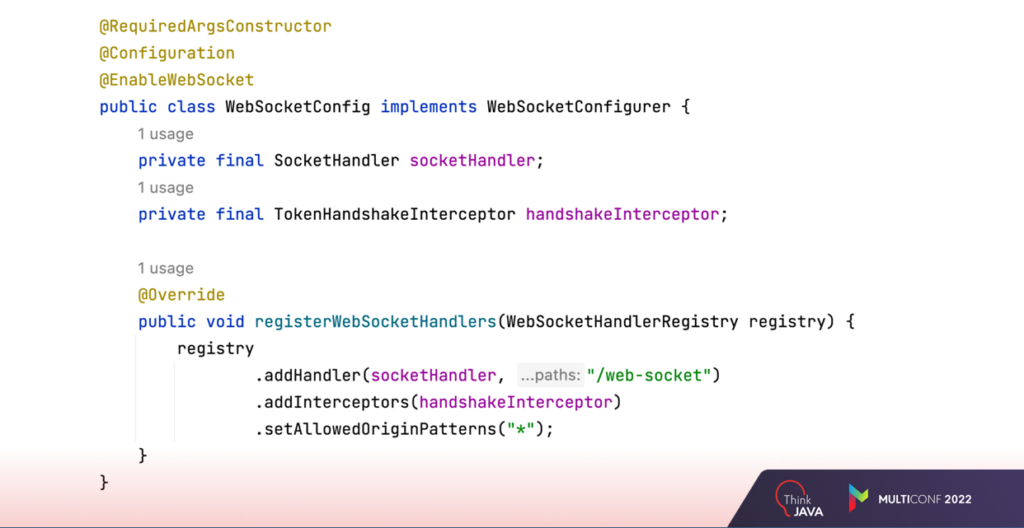

Usually, a lot of this magic happens in classes marked with the @Configuration annotation. It is important to add another one: @EnableWebSocket.

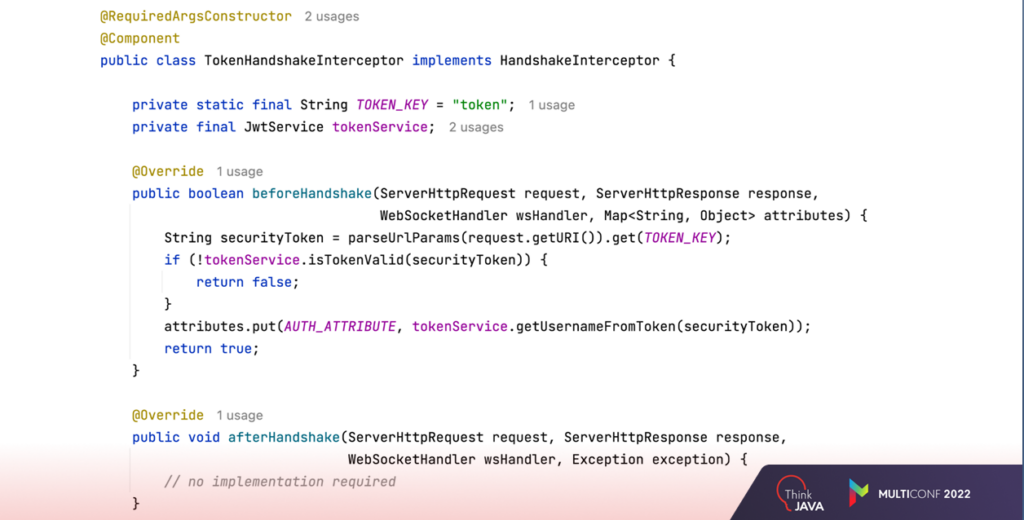

Its documentation says that the WebSocketConfigurer interface should be implemented. As you can see, the interface has only one method. Here you can register message handlers and handshake interceptors. An interceptor is where the connection gets validated. Given that the browser cannot pass any parameters in the body or headers during socket initialization, the only way to identify the user is with URL parameters. So this is where you read the token, validate it and, if it is valid, allow the connection to be established.
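The token check itself can be sketched without any framework code. The snippet below is only an illustration under a few assumptions: the query parameter is called `token`, and the actual validation is passed in as a predicate (in a real project that would be your JWT or session check). In Spring, logic like this would typically be called from a handshake interceptor, which returns false to reject the connection.

```java
import java.io.UnsupportedEncodingException;
import java.net.URI;
import java.net.URLDecoder;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Predicate;

// Illustrative helper (hypothetical name): decides whether a handshake may proceed.
class HandshakeTokenSketch {

    // Splits the raw query string of the handshake URL into decoded name/value pairs.
    static Map<String, String> queryParams(URI uri) {
        Map<String, String> params = new LinkedHashMap<>();
        String rawQuery = uri.getRawQuery();
        if (rawQuery == null || rawQuery.isEmpty()) {
            return params;
        }
        for (String pair : rawQuery.split("&")) {
            int eq = pair.indexOf('=');
            String name = eq < 0 ? pair : pair.substring(0, eq);
            String value = eq < 0 ? "" : pair.substring(eq + 1);
            params.put(decode(name), decode(value));
        }
        return params;
    }

    private static String decode(String value) {
        try {
            return URLDecoder.decode(value, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            throw new IllegalStateException(e); // UTF-8 is always available
        }
    }

    // "token" is an assumed parameter name; tokenValidator stands in for real validation.
    static boolean allowHandshake(URI uri, Predicate<String> tokenValidator) {
        String token = queryParams(uri).get("token");
        return token != null && tokenValidator.test(token);
    }
}
```

For example, `allowHandshake(URI.create("ws://localhost:8080/ws?token=abc"), "abc"::equals)` lets the connection through, while a URL without the parameter is rejected.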

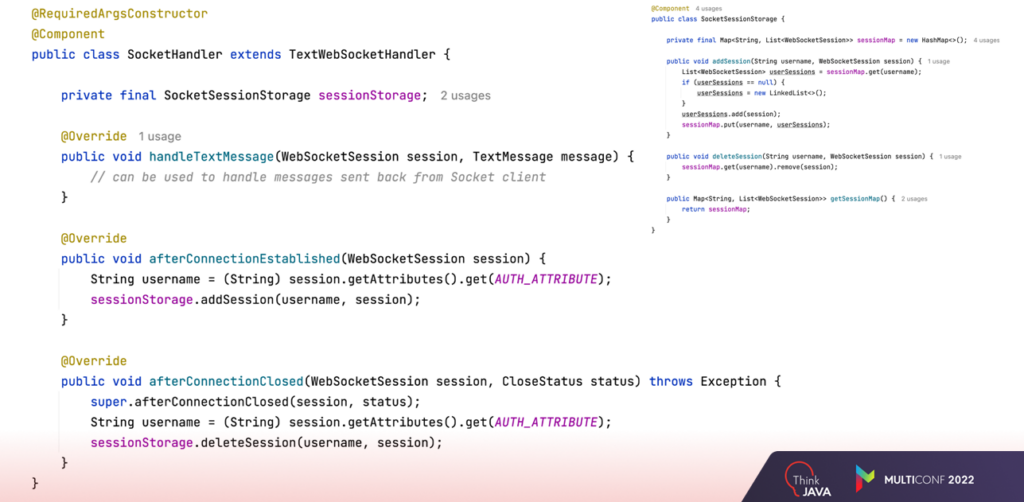

Let’s move on to the next step: the message handler. In this example, only text messages have to be handled, so you rely on the abstract class TextWebSocketHandler. You will send notifications only from the server to the client, so you can leave the handling of incoming messages empty.

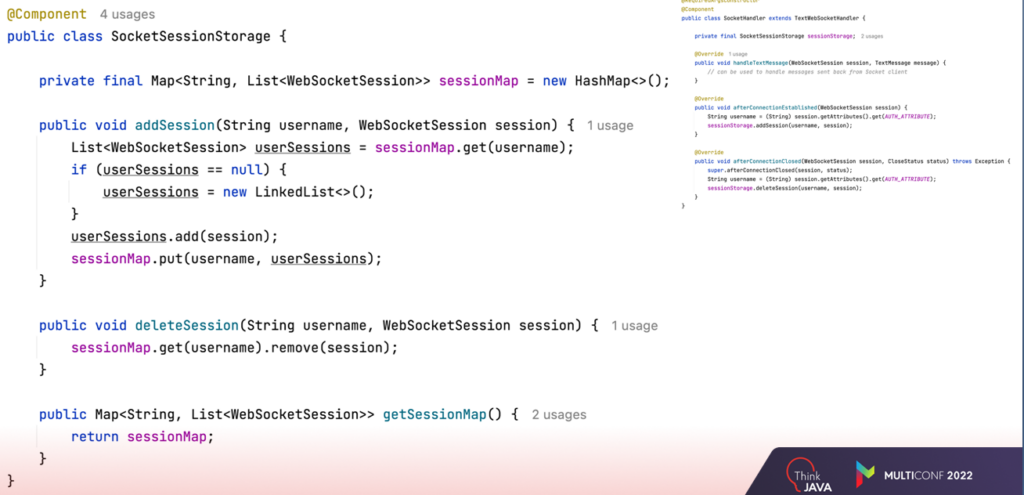

The connection repository will be a regular map, where the key is the user’s username and the value is a list of all of that user’s open sessions (browser tabs).
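A minimal sketch of such a repository might look like the class below. It is generic over the session type so that it runs on its own; in the application the type parameter would be the WebSocket session class. The class name and method names are invented for this example, and a concurrent map is used on the assumption that sessions are added and removed from different threads.

```java
import java.util.Collections;
import java.util.Map;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

// Illustrative registry (hypothetical name): username -> all open sessions of that user.
class SessionRegistry<S> {

    private final Map<String, Set<S>> sessionsByUser = new ConcurrentHashMap<>();

    // Called when a connection is established.
    void add(String username, S session) {
        sessionsByUser
                .computeIfAbsent(username, u -> ConcurrentHashMap.newKeySet())
                .add(session);
    }

    // Called when a connection is closed; drops the user once the last tab is gone.
    void remove(String username, S session) {
        sessionsByUser.computeIfPresent(username, (u, sessions) -> {
            sessions.remove(session);
            return sessions.isEmpty() ? null : sessions;
        });
    }

    // Read-only view of one user's sessions (empty if the user is not connected).
    Set<S> sessionsOf(String username) {
        return Collections.unmodifiableSet(
                sessionsByUser.getOrDefault(username, Collections.emptySet()));
    }

    int userCount() {
        return sessionsByUser.size();
    }
}
```

Removing the user entry together with the last session keeps the map from growing with users who closed all their tabs.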

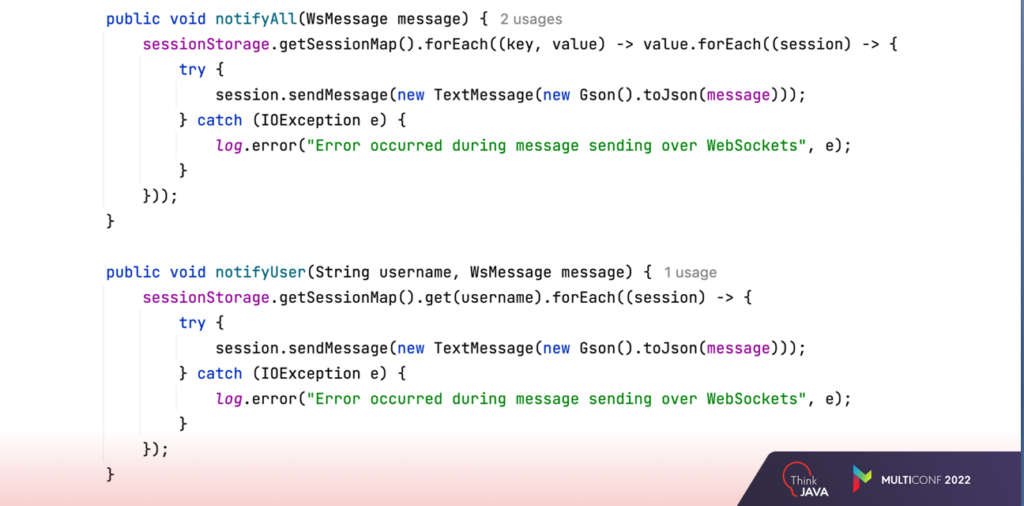

Sending notifications is now a simple task. Let’s try to implement the ability to send notifications to everyone, as well as to one individual user.
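As a rough, framework-free sketch, the two methods could look like this. `ClientSession` is a hypothetical stand-in for a WebSocket session that can send text, and the map has the same shape as the connection repository described above. Note that `notifyAll(String)` is simply an overload next to `Object.notifyAll()`, which is legal in Java but worth a comment.

```java
import java.util.Map;
import java.util.Set;

// Hypothetical stand-in for a WebSocket session that can send a text message.
interface ClientSession {
    void send(String text) throws Exception;
}

// Illustrative sender (hypothetical name) working on a username -> sessions map.
class NotificationSender {

    private final Map<String, Set<ClientSession>> sessionsByUser;

    NotificationSender(Map<String, Set<ClientSession>> sessionsByUser) {
        this.sessionsByUser = sessionsByUser;
    }

    // Sends the message to every open session of one user.
    void notifyUser(String username, String message) {
        Set<ClientSession> sessions = sessionsByUser.get(username);
        if (sessions != null) {
            sessions.forEach(session -> sendQuietly(session, message));
        }
    }

    // Overloads (does not override) Object.notifyAll(): sends to every session of every user.
    void notifyAll(String message) {
        sessionsByUser.values().forEach(
                sessions -> sessions.forEach(session -> sendQuietly(session, message)));
    }

    // One broken connection must not stop delivery to the remaining sessions.
    private void sendQuietly(ClientSession session, String message) {
        try {
            session.send(message);
        } catch (Exception e) {
            System.err.println("Failed to deliver notification: " + e.getMessage());
        }
    }
}
```

Catching the exception per session is a deliberate choice here: a tab that disappeared mid-send should not prevent the other tabs from being updated.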

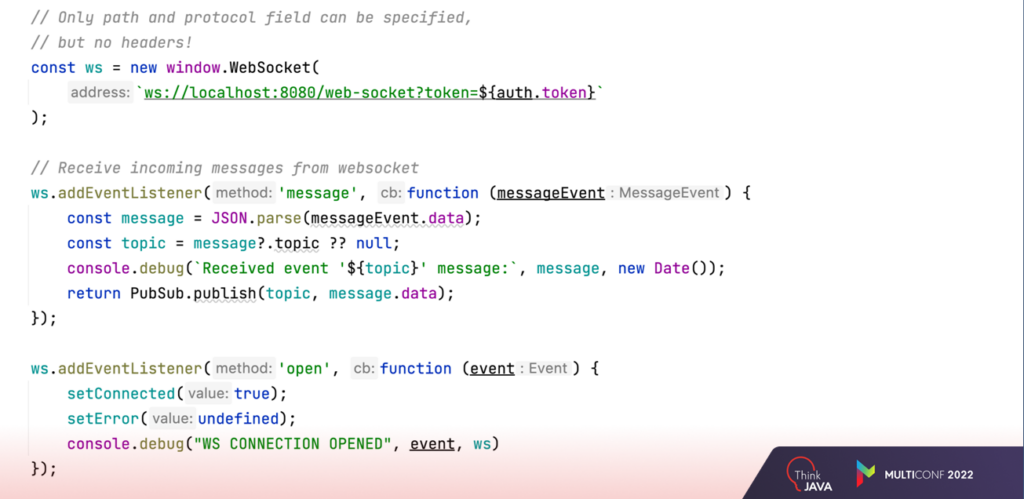

What does it look like on the UI side? As already mentioned, when you initialize a socket you can add neither headers nor a request body. But it is always possible to pass parameters via the URL, which is what you will do. Then you add all the necessary event listeners. Here’s how it looks:

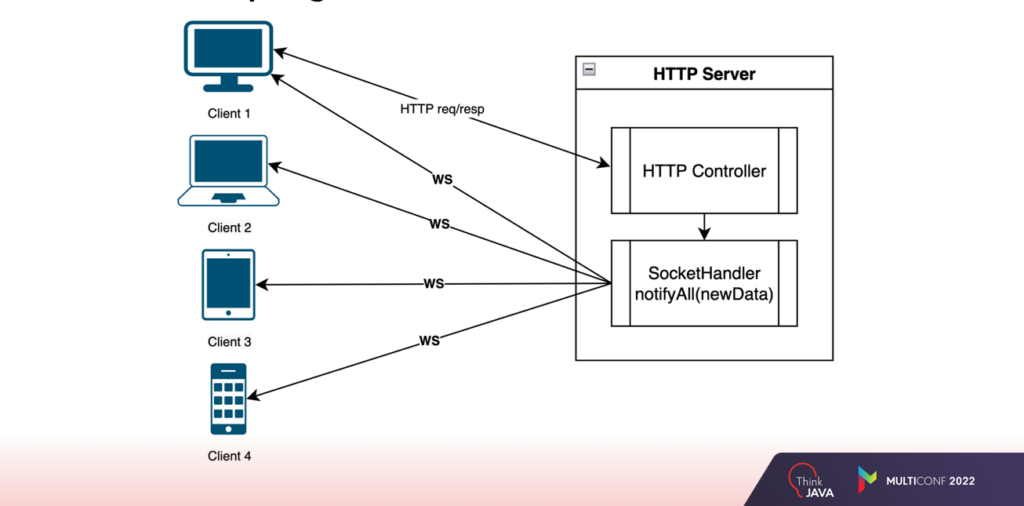

In practice, one client sends an HTTP request that updates the data. Then, in the controller, you call the notifyAll or notifyUser method from one of the previous snippets. This is where a bit of React magic comes in. As you can see, the data is updated in two windows at once:

Everything is cool, everything works. But if it were that easy, this article would never exist.

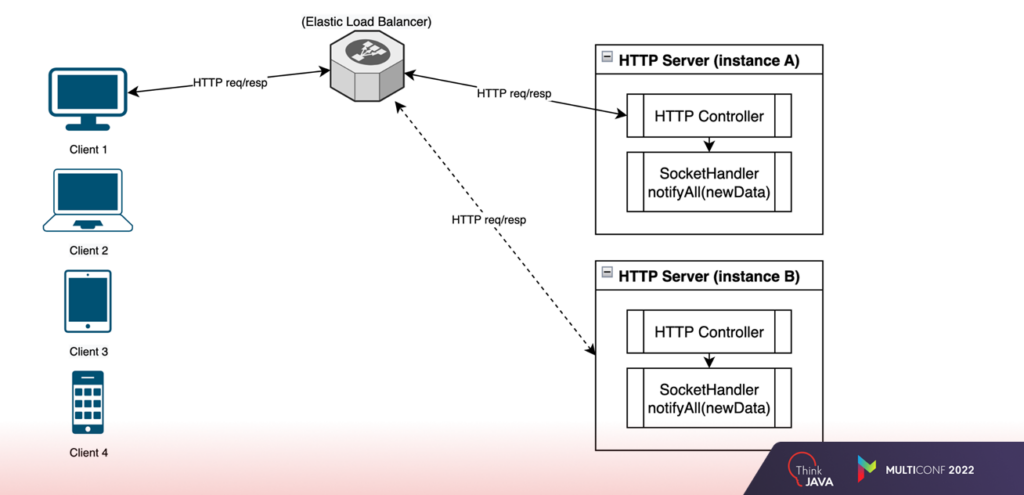

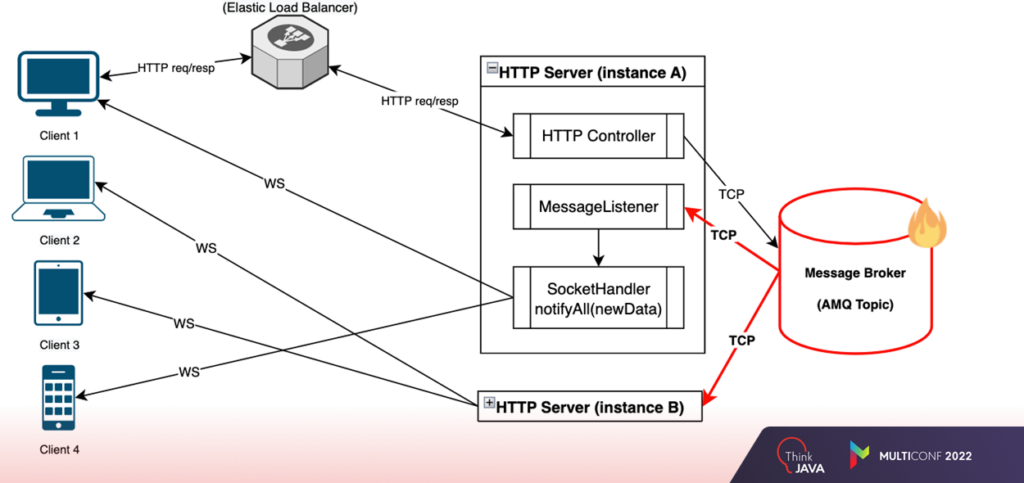

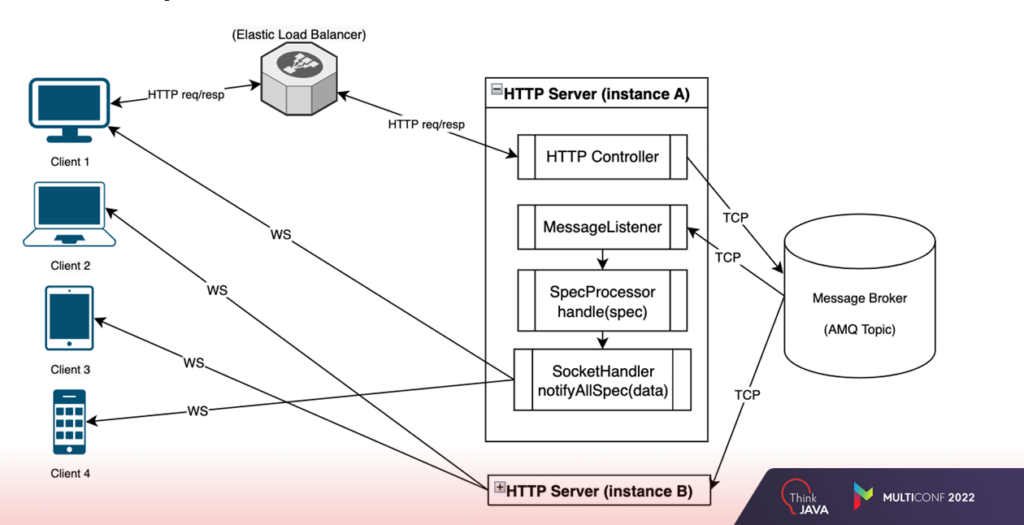

In enterprise projects, performance is usually an important factor. That is why the same microservice often runs as multiple instances that are accessed through a load balancer. Depending on CPU load, RAM usage, or other parameters, new instances can be created or existing ones removed. But how would the current implementation work in such a case?

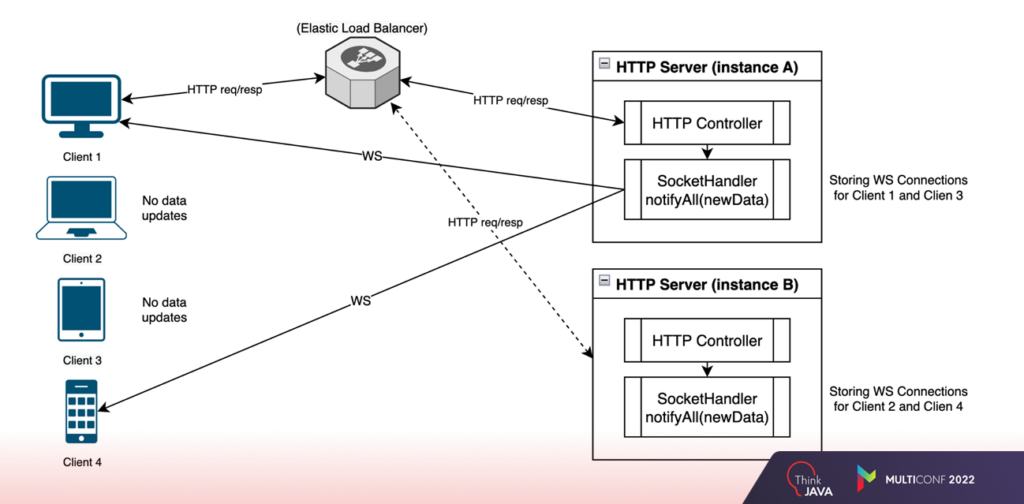

The connection map is stored in memory inside each instance. When a WS connection is initialized, the ELB chooses which instance the request is sent to, and the connection is stored only there. When any one instance iterates over its own map, it is likely that some clients will never get the notification.

To simulate such a case, bring up a second instance of the Spring Boot application on a different port and imitate the load balancer by picking the target host at random. In the header, you should add a small label to show which instance the request is sent to. As you can see, the data in one of the windows remains unchanged.

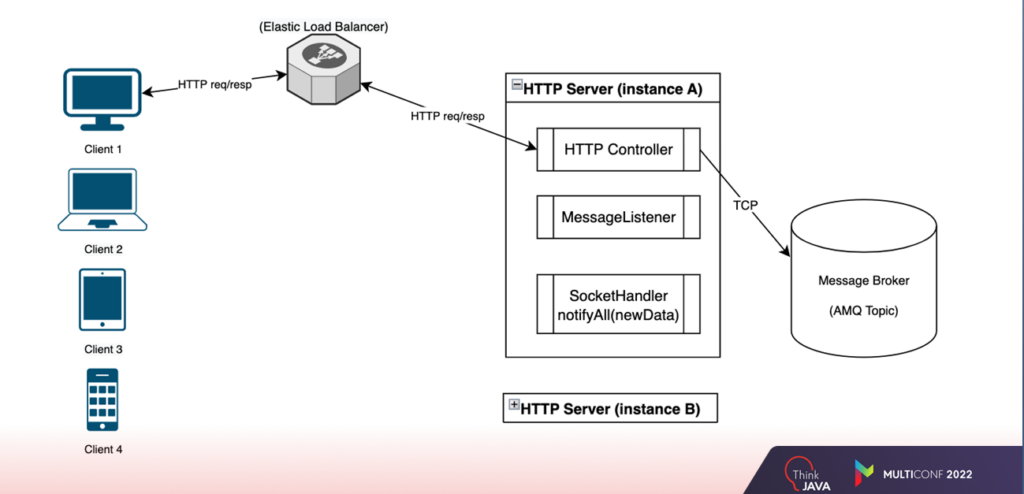

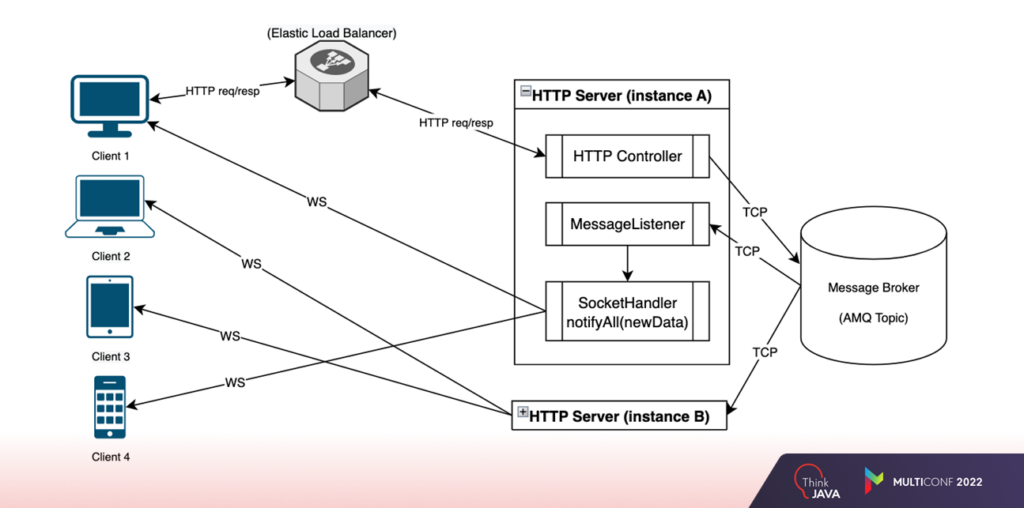

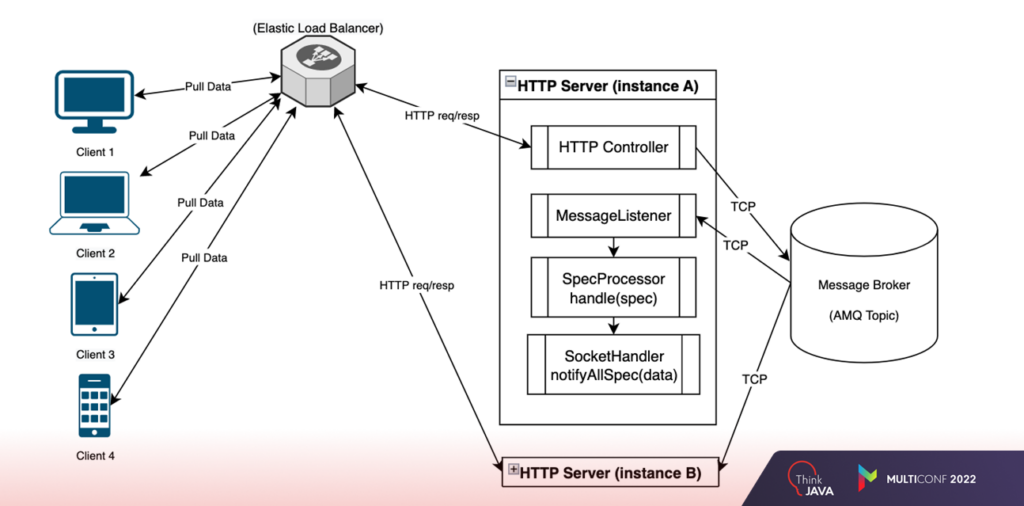

A workable way to solve the problem is to create a single event hub that tells every instance to send the notification to the clients it holds. A message broker comes in handy here. In this case, ActiveMQ was chosen for the simple reason that it is one of the most popular today. So, let’s keep coding…
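Before bringing in a real broker, the idea can be tried out with a tiny in-memory simulation. Everything here is a stand-in: `InMemoryTopic` plays the role of the broker and `AppInstance` plays the role of one application instance with its own client list. The sketch assumes publish/subscribe semantics, where every subscriber gets every event. With a real broker that means a topic rather than a queue, because a queue hands each message to only one consumer.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.function.Consumer;

// In-memory stand-in for a broker topic: every subscriber receives every event.
class InMemoryTopic {
    private final List<Consumer<String>> subscribers = new CopyOnWriteArrayList<>();

    void subscribe(Consumer<String> subscriber) {
        subscribers.add(subscriber);
    }

    void publish(String event) {
        subscribers.forEach(subscriber -> subscriber.accept(event));
    }
}

// Stand-in for one application instance that knows only its own clients.
class AppInstance {
    private final List<String> localClients = new ArrayList<>();

    // Records "client <- event" for every delivery, so the result can be inspected.
    final List<String> delivered = new ArrayList<>();

    void connect(String client) {
        localClients.add(client);
    }

    // What each instance can do alone: notify the clients stored in its own memory.
    void notifyLocalClients(String event) {
        for (String client : localClients) {
            delivered.add(client + " <- " + event);
        }
    }
}
```

If two instances subscribe with `topic.subscribe(instance::notifyLocalClients)`, calling `notifyLocalClients` directly on one of them reaches only its own clients, while `topic.publish(...)` reaches the clients of both.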

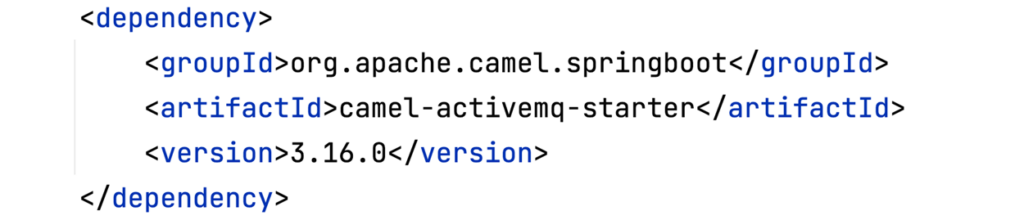

As always, you should update the dependencies first. The choice here is Apache Camel, an open-source, cross-platform Java framework that lets you integrate applications in a simple and straightforward way. Camel greatly simplifies data processing flows. It’s especially useful if you need to build a chain of multiple handlers. It also integrates very easily with Spring.

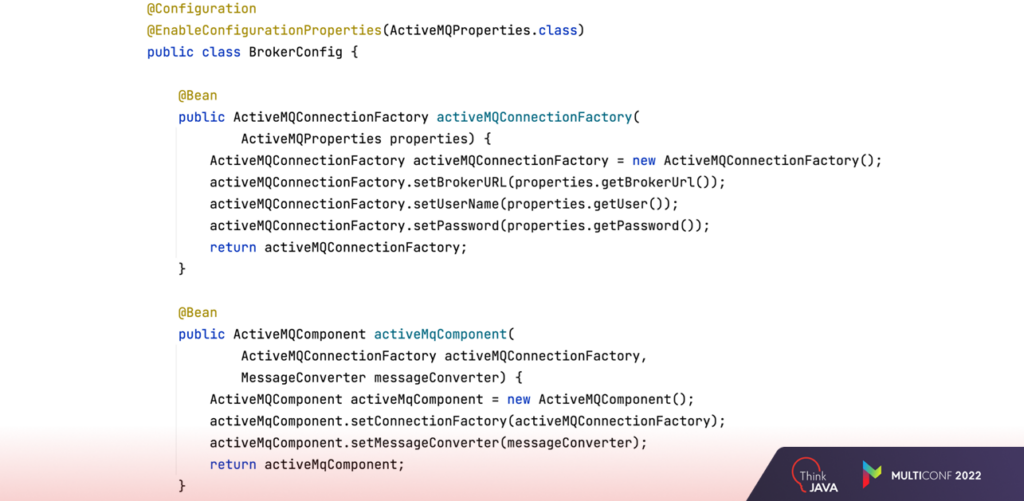

Let’s not break tradition and move on to the Spring configuration. Here you need to add two beans:

- ActiveMQConnectionFactory, which you feed with properties from application.properties.

- ActiveMQComponent: a Camel component that further simplifies sending messages to the broker and receiving them from it.

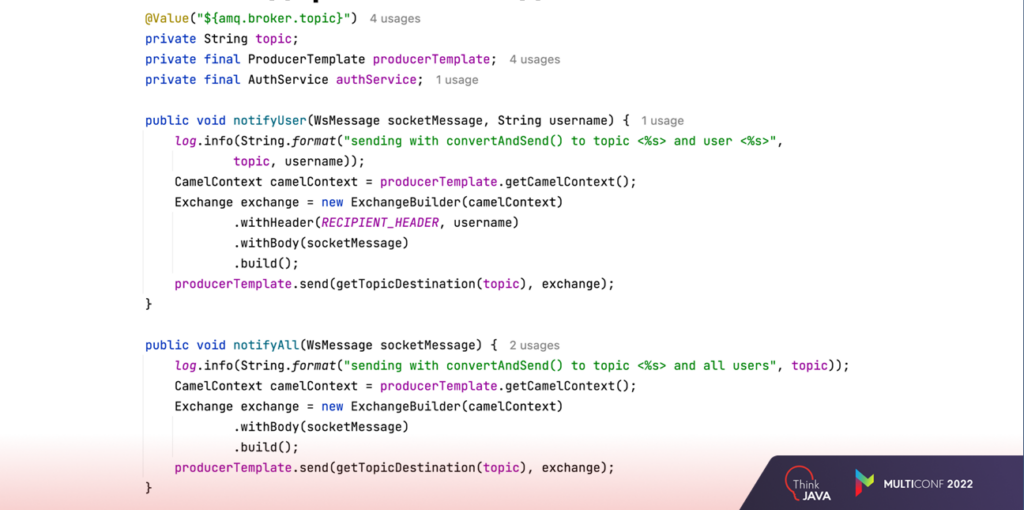

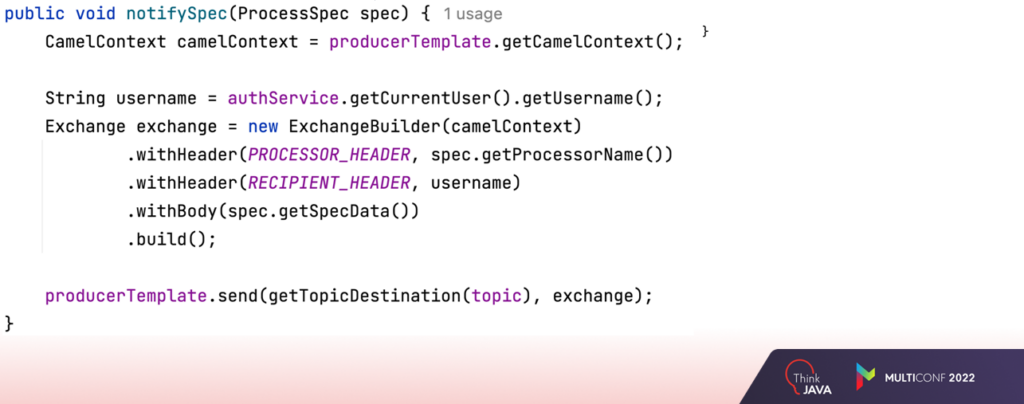

Next, you create two methods with the same signatures as before. Since both sockets and Camel have already been covered, there is no need to dwell on them. The only thing worth stressing is how you pass information about the user the notification is addressed to. This is done with a message header, which can later be read when the notification is received from the broker.
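Stripped of Camel specifics, the sending side could be sketched as follows. `BrokerPublisher` is a hypothetical abstraction over whatever actually talks to the broker (with Camel that role would be played by a producer template), and the header name `username` is an assumption made for this example.

```java
import java.util.Collections;
import java.util.Map;

// Hypothetical abstraction over the broker producer: a body plus message headers.
interface BrokerPublisher {
    void publish(String body, Map<String, Object> headers);
}

// Illustrative sender (hypothetical name) with the same two methods as before.
class BrokerNotifier {

    // Assumed header name; the consumer must read the same one.
    static final String USERNAME_HEADER = "username";

    private final BrokerPublisher publisher;

    BrokerNotifier(BrokerPublisher publisher) {
        this.publisher = publisher;
    }

    // No header: every instance forwards the notification to all of its clients.
    void notifyAll(String jsonBody) {
        publisher.publish(jsonBody, Collections.emptyMap());
    }

    // The target user travels in a header, so the body format stays the same.
    void notifyUser(String username, String jsonBody) {
        publisher.publish(jsonBody, Collections.singletonMap(USERNAME_HEADER, username));
    }
}
```

Keeping the recipient in a header rather than in the body means the consumer can decide where to route the message without parsing the payload first.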

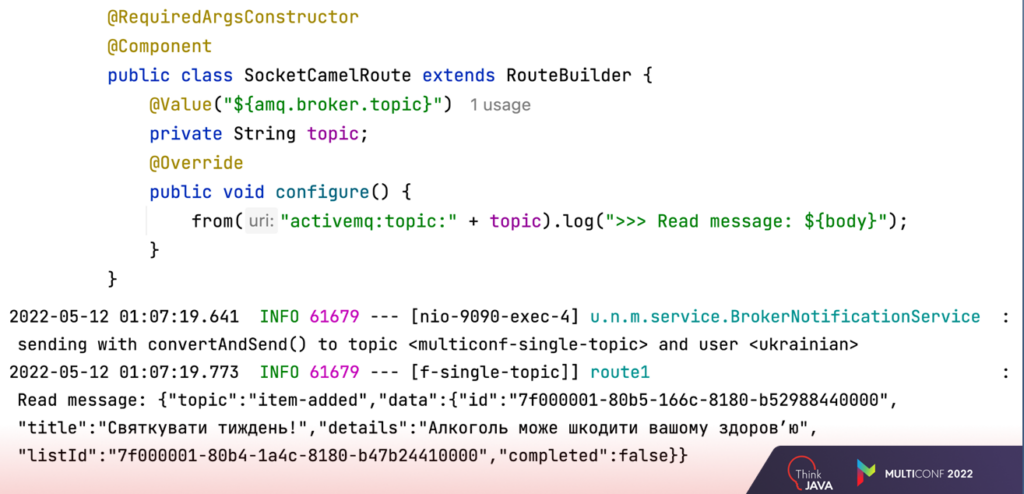

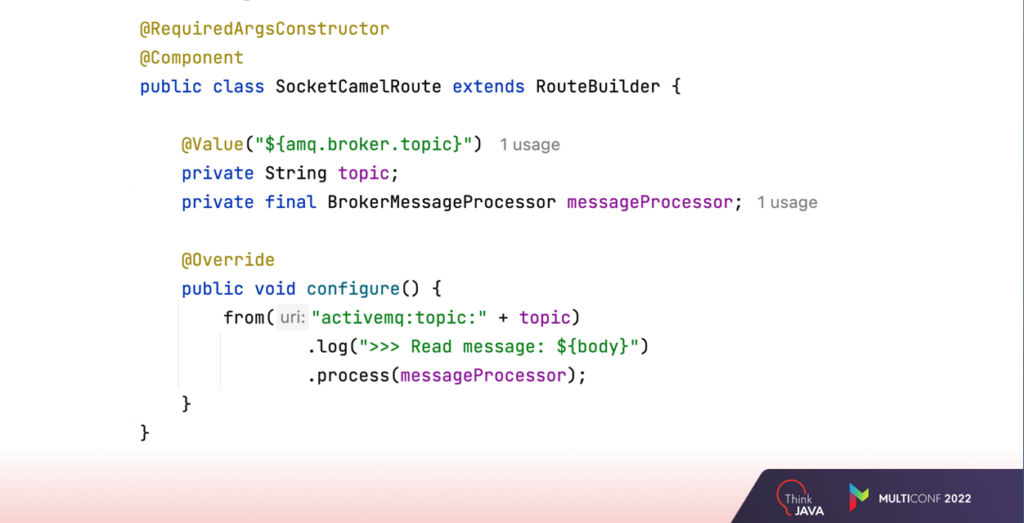

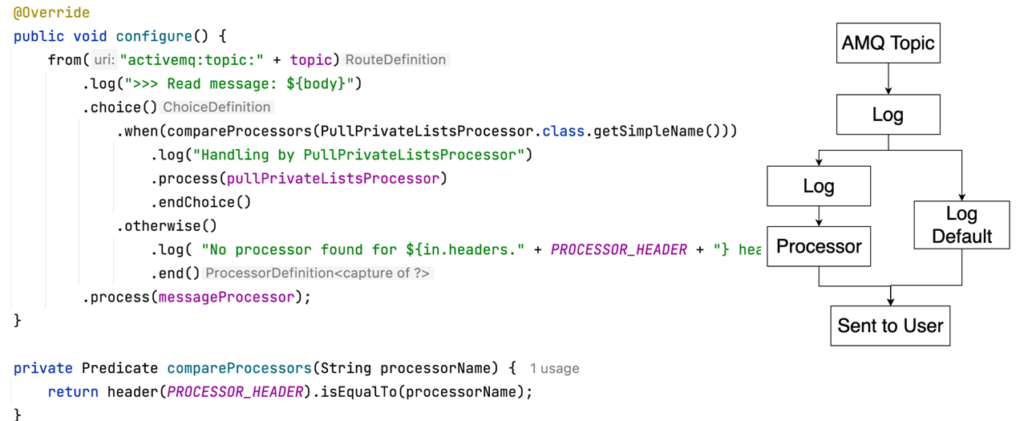

Once you have sent these notifications, they must be received by each instance. This is one of the reasons Camel was added to the project: it’s incredibly easy to use. Here is an example of reading and logging a message:

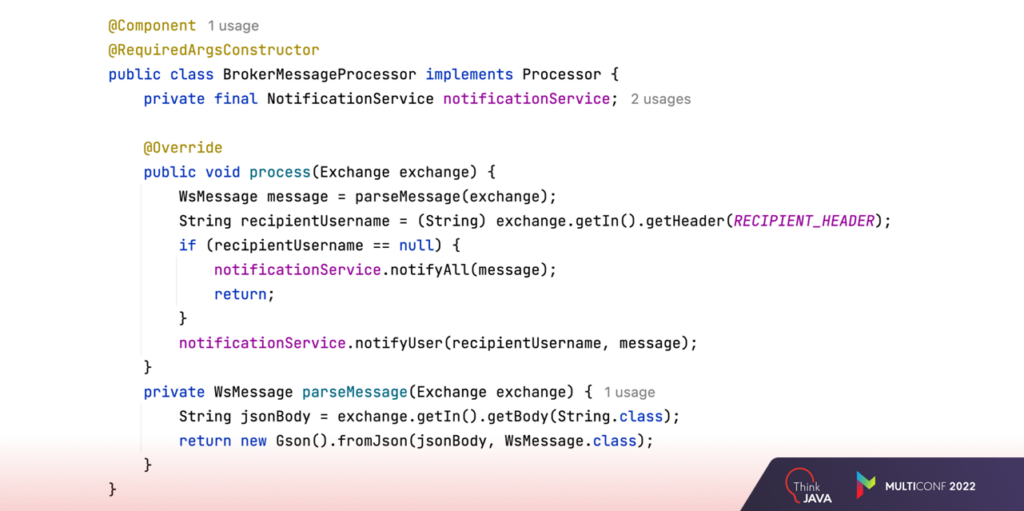

However, simply logging notifications is not enough. Next, you need to send them to all clients. To do this, you should create a handler:

- Parse the notification body from a plain String into a JSON object and then into a Java object

- Read the header you set earlier

- Send the notification using the existing methods

- Add the handler to your data flow as the next step
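The routing decision inside such a handler can be sketched on its own. In the snippet below the class name is invented, the header name `username` is an assumption, and the two callbacks stand in for the existing notifyAll and notifyUser methods. To stay dependency-free, the sketch forwards the body as a JSON string and leaves out the parsing step.

```java
import java.util.Map;
import java.util.function.BiConsumer;
import java.util.function.Consumer;

// Illustrative handler (hypothetical name) for a message received from the broker.
class BrokerMessageHandler {

    // Assumed header name; must match the one set by the sender.
    static final String USERNAME_HEADER = "username";

    private final Consumer<String> toAll;            // stands in for notifyAll(message)
    private final BiConsumer<String, String> toUser; // stands in for notifyUser(username, message)

    BrokerMessageHandler(Consumer<String> toAll, BiConsumer<String, String> toUser) {
        this.toAll = toAll;
        this.toUser = toUser;
    }

    // Header present: one user's sessions. Header absent: everyone on this instance.
    void handle(String body, Map<String, Object> headers) {
        Object username = headers.get(USERNAME_HEADER);
        if (username == null) {
            toAll.accept(body);
        } else {
            toUser.accept(username.toString(), body);
        }
    }
}
```

Because every instance runs the same handler, a user with tabs on different instances gets the update in each of them.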

Finally, you can move on to the test. Here the clients are connected to two different instances. Perform an update in one and observe the update in both at once:

Now everything works … but…

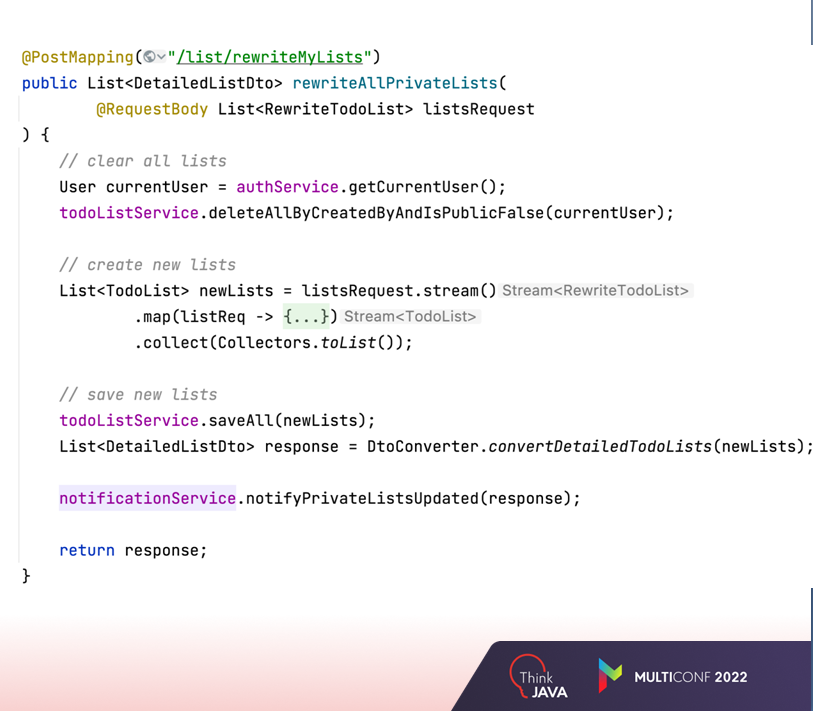

Everything will work fine as long as you operate with small volumes. But then again, many systems in real projects handle large amounts of data. So let’s also add an endpoint that completely rewrites all to-do lists belonging to the current user. As input, it takes an array of these lists, the size of which is limited only by your imagination.

This way, notifications with the updated lists will go through the broker and be forwarded to all instances. However, message brokers are not designed for this purpose. They work quickly and efficiently with small amounts of data. Larger payloads can lead to performance problems and bottlenecks. So how can you fix this?

Let’s take a different approach. For this type of notification, you will send not the whole updated object but only information (a specification) about which object has changed and how exactly. SpecProcessors on the instances will then collect all the new data on their own and send it out to clients.

All you have to do is slightly change the data format that is sent to the broker. Let’s create a primitive version of the specification, which includes:

- The class name of the processor that will handle the notification

- Parameters in map format

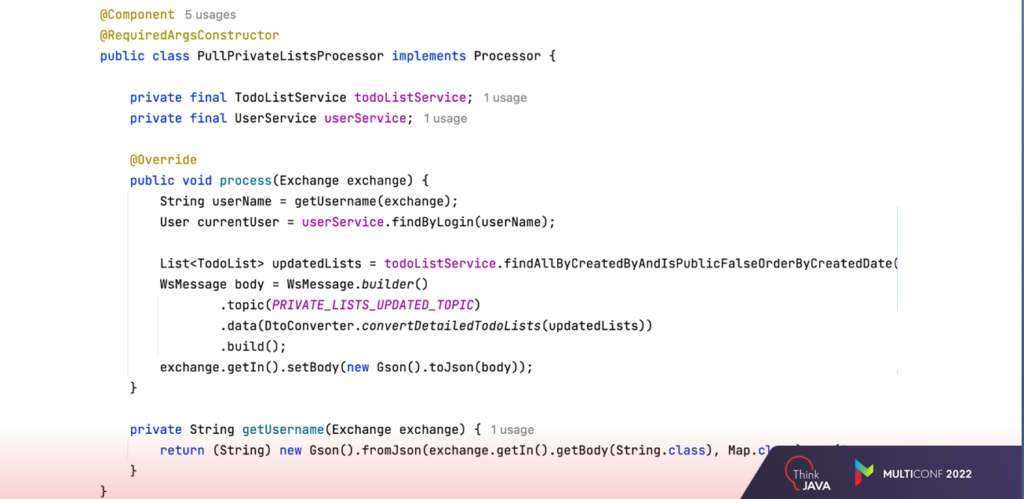

Next, you create a processor that will pull the updated data from the database, a third-party service, or elsewhere, and pass it on.
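Put together, the specification and the processor lookup might be sketched like this. All names are hypothetical, the parameters are plain strings for simplicity, and the processor is registered under a name instead of being resolved by reflection. A real processor would query the database or a third-party service where the example below only builds a string.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Small message sent through the broker instead of the full payload.
class NotificationSpec {
    final String processorName;       // which processor should handle the notification
    final Map<String, String> params; // what it needs to fetch the data, e.g. a username

    NotificationSpec(String processorName, Map<String, String> params) {
        this.processorName = processorName;
        this.params = params;
    }
}

// A processor fetches the fresh data itself and returns the payload for the clients.
interface SpecProcessor {
    String process(Map<String, String> params);
}

// Illustrative router step (hypothetical name): finds the processor named in the spec.
class SpecRouter {
    private final Map<String, SpecProcessor> processors = new HashMap<>();

    void register(String name, SpecProcessor processor) {
        processors.put(name, processor);
    }

    // Empty result means no processor is registered for this spec.
    Optional<String> route(NotificationSpec spec) {
        return Optional.ofNullable(processors.get(spec.processorName))
                .map(processor -> processor.process(spec.params));
    }
}
```

The broker now carries only a processor name and a handful of parameters, so the message size no longer depends on how many lists the user rewrote.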

After that, update the data flow in the router. If an appropriate processor is found, the notification is handed to it for processing. Then a notification is sent to the clients via WebSockets. Here is a simplified diagram of how it works:

Let’s go back to where it started and see how it all evolved into a bidirectional system. This system can easily scale and does not put a strain on the resources:

Now when you need to implement sockets, you will think through the details in advance, and know what difficulties you may encounter and how to overcome them. And if you want to take a closer look at the code in this article, follow the links to the frontend and backend repositories: