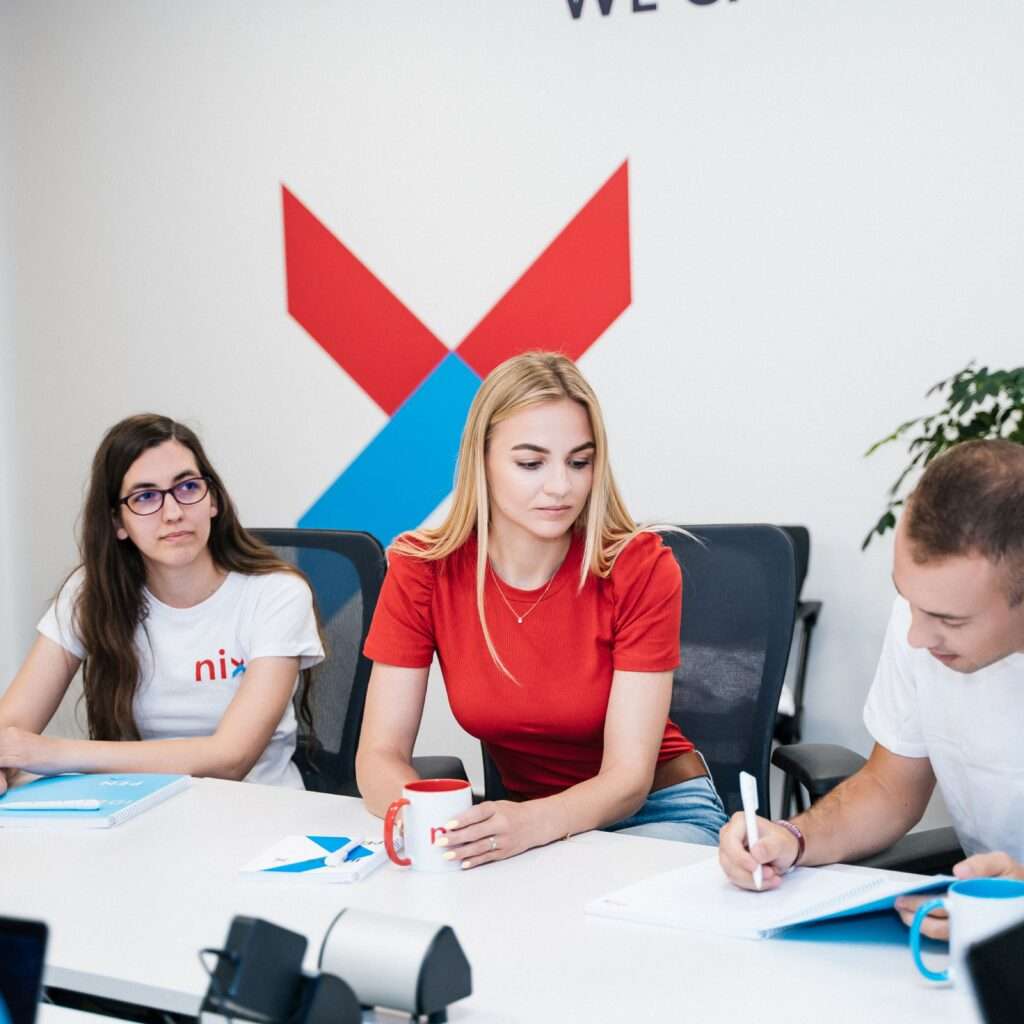

Become part of the NIX team

NIX is a global supplier of software engineering and IT outsourcing services

NIX teams collaborate with partners from different countries. Our specialists have experience developing innovative projects, from ecommerce to cloud, for some of the largest companies in the world, including Fortune 500 companies. The teams focus on steady growth in the international IT market, in business, and in their own professional skills.

Internet Services and Software

Internet Services and Software

Finance and Banking

Finance and Banking

Working with NIX, you’ll get:

- A stable, long-term work environment and a competitive salary

- All the tools and devices you need to work comfortably on project tasks, provided in the office

- Opportunities for professional and personal growth

- A mentoring program and internal and external professional training programs

- Paid English courses and conversation clubs

- A comfortable office in the 13th district of Budapest (Vaci Greens)

- Spacious modern kitchens with professional coffee machines

- Comfortable recreation areas with game consoles and board games

- Bike parking